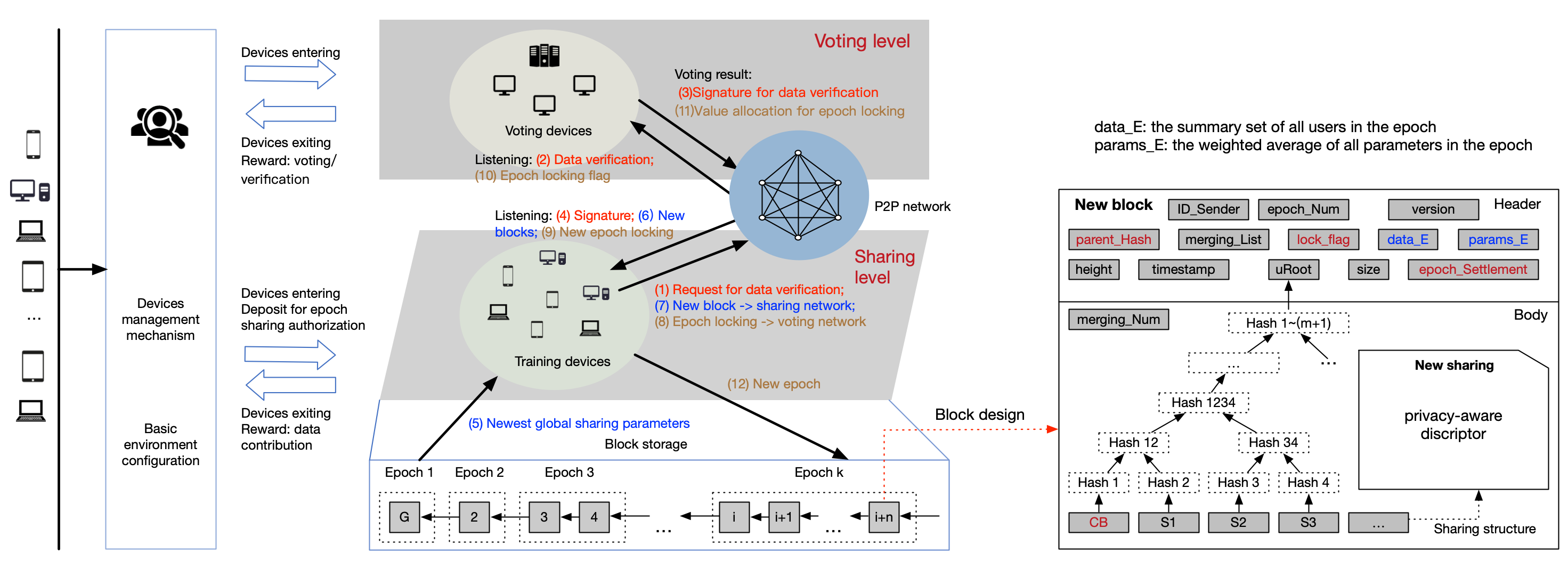

Dubbed the new oil of our age, data has been universally recognized as the most important element in today’s economy. Yet the current global data economy is seriously flawed in several important respects, including privacy, data valuation, data accounting, data pricing and data auditing. The solution to these long-neglected problems is to rigorously establish “data” as a new asset class and to invest research effort in the whole bundle of problems lying at the intersection of data science and other domains, which we collectively place under the umbrella of data asset governance. Furthermore, big data today increasingly entails a distributed setting in which data from various sources is contributed to achieve data intelligence collaboratively. One example is federated learning, which enables geo-distributed users to cooperatively train a common model. However, the security of such distributed collaborative intelligence is increasingly being questioned, because malicious clients or central servers can repeatedly attack the common model or users’ private data. Meanwhile, with the boom of Bitcoin, “decentralization” has attracted increasing attention as an emerging form of data collaboration, because it no longer requires a trusted third party. It is therefore important to examine the challenges peculiar to decentralized data collaboration, such as consistency, trust and incentives. To this end, we propose FedBFL, a decentralized Byzantine fault-tolerant federated learning framework that combines distributed ledger technologies with a novel partially asynchronous consensus protocol called Proof of Data (PoD). The framework uses a distributed ledger to record the global model and the exchange of model updates. 
To enable PoD, we also devise an innovative incentive mechanism based on data contribution, which can effectively reduce malicious attacks and adequately leverage the data characteristics of each node to train a model with superior generalization performance. We then discuss the scalability of FedBFL, with particular attention to the theoretical resilience and performance of PoD.
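To make the two ingredients above concrete, the sketch below shows (a) standard federated averaging (FedAvg), in which client model vectors are combined with weights proportional to each client's local sample count, and (b) a *hypothetical* incentive rule that splits a reward pool in proportion to each node's data-contribution score. This is an illustrative sketch under stated assumptions, not the FedBFL or PoD algorithm. The function names `fedavg` and `split_reward`, the flat list-of-floats model representation, and the proportional reward rule are all assumptions.

```python
from typing import Dict, List


def fedavg(updates: Dict[str, List[float]], num_samples: Dict[str, int]) -> List[float]:
    """Standard FedAvg: weight each client's parameter vector by its share of training samples."""
    total = sum(num_samples[c] for c in updates)
    if total == 0:
        raise ValueError("no training samples reported")
    dim = len(next(iter(updates.values())))
    global_model = [0.0] * dim
    for client, params in updates.items():
        weight = num_samples[client] / total  # client's fraction of all samples
        for i, p in enumerate(params):
            global_model[i] += weight * p
    return global_model


def split_reward(pool: float, contribution: Dict[str, float]) -> Dict[str, float]:
    """Hypothetical incentive rule (assumption, not from the paper):
    divide a reward pool in proportion to non-negative contribution scores."""
    total = sum(contribution.values())
    if total <= 0:
        # Nothing was contributed, so no node earns a share of the pool.
        return {node: 0.0 for node in contribution}
    return {node: pool * c / total for node, c in contribution.items()}


if __name__ == "__main__":
    updates = {"a": [1.0, 2.0], "b": [3.0, 4.0]}
    samples = {"a": 100, "b": 300}
    print(fedavg(updates, samples))
    print(split_reward(10.0, {"a": 1.0, "b": 3.0}))
```

Note that plain FedAvg is not Byzantine-robust: a single malicious client can shift the weighted average arbitrarily. That weakness motivates adding consensus and contribution-aware incentives on top of aggregation.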